Yep, believe it or not, they are here to stay!

On this page, I share my experience with the Udacity Self-Driving Car Nanodegree course and the projects I have developed along the way.

Ever since I started Trekken, I have been involved in developing AI algorithms to improve the detection of aggressive maneuvers in our smartphone application. After reviewing several algorithms, I got really excited about all the possibilities AI brings! And, of course, one of its mainstream applications is self-driving cars.

Before we dive in, it is important to explain why people are so excited about it. Driving a car is not a formal problem: it is practically impossible to formalize the real world exhaustively enough for a computer program to drive a car perfectly. That’s why it is a major milestone; it is far more complicated than teaching a computer to play chess (which was already a great milestone for computer science). An autonomous car therefore has to behave like a human in some respects, which means being able to improvise when facing a scenario it was never explicitly trained for. This is why AI algorithms fit this job so well.

After a few months of research on the subject, I came across the Udacity course on self-driving cars. For someone who is not an AI/robotics specialist, like myself, it is really hard to piece together all the algorithms needed to understand the state of the art in autonomous driving. So, I decided to enroll in the Udacity Nanodegree program to learn more about the subject. The course consists of three terms of three months each; I have just finished the second term. Below, I share some interesting projects I have developed during the course.

Traffic Sign Classification

This project consists of a neural network that classifies German traffic signs. It uses a custom variation of the LeNet neural network, extended with two extra convolutional layers, to identify and classify traffic signs from the German Traffic Sign Dataset.

The main goal of this project is to develop a neural network that takes a raw image of a German traffic sign as input and outputs the label that correctly classifies it.
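To give an idea of what "outputs a label" means in practice, here is a minimal sketch of the very last step of a classifier: turning the raw scores of the final layer into a label. It assumes the network ends in a softmax, which is common but is not something I am quoting from the repository. The class names and the scores are made up for illustration, and `softmax` and `predict_label` are hypothetical helper names.

```python
import math

def softmax(logits):
    """Convert raw network scores into probabilities that sum to 1."""
    peak = max(logits)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_label(logits, class_names):
    """Return the most likely class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]

# Hypothetical scores for three illustrative classes (the real dataset has many more).
CLASS_NAMES = ["Speed limit (30km/h)", "Stop", "Yield"]
label, confidence = predict_label([1.2, 4.0, 0.3], CLASS_NAMES)
print(label, round(confidence, 3))
```

The convolutional layers do the hard work of producing good scores; this last step only picks the winner.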

Link: https://github.com/rtsaad/CarND-Traffic-Sign-Classifier-Project

Self-Driving Car Behavioral Cloning

This project develops a Convolutional Neural Network (CNN) that clones human driving behavior for self-driving purposes. The main goal is to develop an end-to-end neural network that takes as input the video from a camera mounted on the front of the car and outputs the car's steering angle.

Link: https://github.com/rtsaad/CarND-Behavioral-Cloning-P3

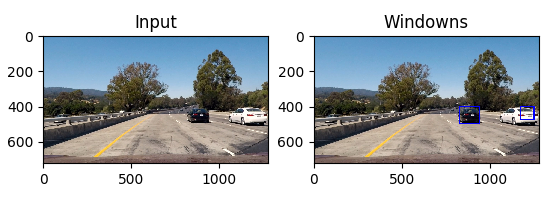

Vehicle and Lane Detection

This project consists of a Python script that detects vehicles and lane lines in an image for self-driving purposes. It uses image recognition techniques to identify objects and draw bounding boxes over the vehicles on the original image. The script can process a single image or a sequence of images (i.e., a video).

Links: https://github.com/rtsaad/CarND-Advanced-Lane-Lines

https://github.com/rtsaad/CarND-Vehicle-Detection

Localization

This project consists of a C++ implementation of a particle filter that estimates the location (GPS position) of a moving car. It improves the estimates by sensing landmarks and associating them with their actual positions, obtained from a known map.

The main goal of this project is to develop a C++ particle filter that successfully estimates the position of the car in the Udacity simulator.
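The idea behind a particle filter can be sketched in a few lines. The example below is a simplified Python illustration, not the C++ code from the repository: it tracks only the (x, y) position, measures plain distances to landmarks, and assumes each measurement is already matched to its landmark. All function names, noise values, and the landmark map are assumptions chosen for the demo.

```python
import math
import random

def gaussian(x, mu, sigma):
    """Probability density of x under a normal distribution N(mu, sigma^2)."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def init_particles(n, gps_x, gps_y, std, rng):
    """Scatter particles around a noisy initial GPS estimate."""
    return [(rng.gauss(gps_x, std), rng.gauss(gps_y, std)) for _ in range(n)]

def predict(particles, dx, dy, std, rng):
    """Move every particle by the commanded displacement plus motion noise."""
    return [(px + dx + rng.gauss(0.0, std), py + dy + rng.gauss(0.0, std))
            for px, py in particles]

def update_weights(particles, ranges, landmarks, std):
    """Weight each particle by how well it explains the measured landmark distances."""
    weights = []
    for px, py in particles:
        weight = 1.0
        for (lx, ly), measured in zip(landmarks, ranges):
            expected = math.hypot(lx - px, ly - py)
            weight *= gaussian(measured, expected, std)
        weights.append(weight)
    total = sum(weights)
    if total == 0.0:  # every particle is implausible: fall back to uniform weights
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]

def resample(particles, weights, rng):
    """Draw a new particle set, favoring particles with high weight."""
    return rng.choices(particles, weights=weights, k=len(particles))

def estimate(particles):
    """Use the mean of the particles as the position estimate."""
    n = len(particles)
    return (sum(p[0] for p in particles) / n, sum(p[1] for p in particles) / n)

def run_demo(seed=0, steps=5):
    rng = random.Random(seed)
    landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]  # the known map
    true_x, true_y = 2.0, 2.0
    particles = init_particles(500, 2.5, 1.5, 1.0, rng)
    for _ in range(steps):
        true_x, true_y = true_x + 1.0, true_y + 0.5  # the real car moves
        particles = predict(particles, 1.0, 0.5, 0.1, rng)
        ranges = [math.hypot(lx - true_x, ly - true_y) + rng.gauss(0.0, 0.3)
                  for lx, ly in landmarks]  # simulated noisy sensor readings
        weights = update_weights(particles, ranges, landmarks, 0.3)
        particles = resample(particles, weights, rng)
    return estimate(particles), (true_x, true_y)

estimated, actual = run_demo()
print("estimated:", estimated, "actual:", actual)
```

Each loop iteration is one predict, weight, and resample cycle; particles that disagree with the landmark measurements die out, so the cloud tightens around the car's true position.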

Link: https://github.com/rtsaad/CarND-Kidnapped-Vehicle-Project

Following a Path

This project consists of a C++ implementation of Model Predictive Control (MPC) that controls the steering angle and the throttle of a car in the Udacity self-driving simulator. The main goal is to develop a C++ MPC controller that successfully drives the vehicle around the simulator track.
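The core loop of MPC is: predict where the car will go for each candidate control, score the predictions, apply only the first control, then repeat. Below is a toy Python sketch of that loop, not the C++ controller from the repository. It assumes a simple kinematic bicycle model, a straight reference line, and a brute-force search over a small grid of controls held constant over the horizon, where a real controller would use a proper nonlinear optimizer. The constant `LF`, the cost weights, and all function names are illustrative assumptions.

```python
import math

LF = 2.67  # assumed distance from the front axle to the center of gravity (m)

def step(state, steer, accel, dt, lf=LF):
    """Advance a kinematic bicycle model (x, y, heading, speed) by one time step."""
    x, y, psi, v = state
    return (x + v * math.cos(psi) * dt,
            y + v * math.sin(psi) * dt,
            psi + v / lf * steer * dt,
            v + accel * dt)

def rollout_cost(state, steer, accel, ref_y, ref_v, horizon, dt):
    """Predict the trajectory under constant controls and accumulate the cost."""
    cost = 0.0
    for _ in range(horizon):
        state = step(state, steer, accel, dt)
        _, y, psi, v = state
        # Penalize lateral offset, heading error, and speed error (assumed weights).
        cost += (y - ref_y) ** 2 + psi ** 2 + 0.1 * (v - ref_v) ** 2
    return cost

def choose_controls(state, ref_y=0.0, ref_v=5.0, horizon=10, dt=0.1):
    """Pick the (steer, accel) pair with the lowest predicted cost."""
    steers = [math.radians(deg) for deg in range(-25, 26)]
    accels = [-1.0, 0.0, 1.0]
    candidates = [(s, a) for s in steers for a in accels]
    return min(candidates,
               key=lambda c: rollout_cost(state, c[0], c[1], ref_y, ref_v, horizon, dt))

def simulate(state, steps, dt=0.1):
    """Receding-horizon loop: re-plan at every step, apply only the first control."""
    for _ in range(steps):
        steer, accel = choose_controls(state, dt=dt)
        state = step(state, steer, accel, dt)
    return state

# Start 1 m to the side of the reference line, driving slower than the target speed.
final_state = simulate((0.0, 1.0, 0.0, 3.0), steps=100)
print("final lateral offset:", final_state[1], "final speed:", final_state[3])
```

Re-planning at every step is what makes the approach robust: even though each plan is crude, errors in one prediction are corrected by the next one.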

Link: https://github.com/rtsaad/CarND-MPC-Project